At the start of this study, I had no clue what the intersection of machine learning and music looked like. It was a murky, mysterious area that seemed cool but also tiring and complicated to get involved in. However, with the guidance of Professor Peck and of Rebecca Fiebrink, who teaches the online course I took through the University of London, I spent the first half of this course learning the tools and skills needed to create a new electronic instrument.

In learning this niche skill, I also learned a great deal about machine learning in general: neural nets, classification, regression, and more. I learned about the algorithms used in different types of machine learning, what each one is good and bad at, and how best to implement them. I then learned which factors matter most when pairing these algorithms with sound: how to build a good training data set, how to set up a system that handles silence, errors, and outliers in audio data, and how to work with space and time in ways that are becoming essential in electronic music (there is a great article on that here: http://www.jstor.org/stable/41389143?seq=1&cid=pdf-reference#references_tab_contents).
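No specific technique for handling silence and outliers is given here, so the following Python is a minimal sketch of one common approach, not the method taught in the course. It drops frames whose RMS level falls below a silence threshold, then drops frames whose loudness is more than a few standard deviations away from the rest. The function names and both thresholds (`silence_rms=0.01`, `max_z=3.0`) are hypothetical and would need tuning for a real microphone.

```python
import math
import statistics


def rms(frame):
    """Root-mean-square level of a frame of samples (a simple loudness measure)."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def clean_training_set(frames, labels, silence_rms=0.01, max_z=3.0):
    """Hypothetical cleaning pass for labeled audio frames.

    1. Silence gate: drop frames quieter than `silence_rms`.
    2. Outlier rejection: drop frames whose RMS is more than `max_z`
       standard deviations from the mean of the remaining frames.
    Returns the kept (frame, label) pairs.
    """
    kept = [(f, y) for f, y in zip(frames, labels) if rms(f) >= silence_rms]
    if len(kept) < 2:
        return kept  # too few frames to estimate a spread

    levels = [rms(f) for f, _ in kept]
    mean = statistics.mean(levels)
    spread = statistics.pstdev(levels)
    if spread == 0:
        return kept  # every frame is equally loud, so nothing stands out

    return [
        (f, y)
        for (f, y), level in zip(kept, levels)
        if abs(level - mean) / spread <= max_z
    ]
```

Loudness is used as the screening feature only because it needs no extra libraries. Pitch or spectral features could be screened the same way, although z-scores become unreliable on very small data sets.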

The online course I took through the University of London gave me essay prompts that asked for creative ideas about how I could bring these systems into my own performances. Having to put these ideas into the context of my own artistic life, along with our lab's encouragement to come up with new ideas for my final project, pushed me toward some cool, exciting possibilities for the future. The “Fun Implementations” section lists many of the ideas I considered (including musical moon shoes!). I even thought of ways to use my newfound skills on campus through an interactive musical-engineering installation that was planned for the Dana Engineering building.

In the end, I decided to create a neural net that takes in live training data and, once trained on a variety of pitches, applies different effects to the user's voice based on the frequency (Hz) of the pitch being sung. I, for example, would use this tool to drive a vocoder that sings high harmonies with me when I sing low frequencies, low harmonies when I sing high frequencies, and general dimensional enhancement/vocal doubling when I sing mid-range frequencies. This would create a more even, enriched sonic environment in my songs and make them easier to understand. A problem I sometimes run into is that a vocoder's range is fuzzy and poorly matched to my voice: when I start singing very high, the vocoder still tries to produce pitches above me, and when I sing very low, it still produces pitches below me. Once this patch was finished, I planned to move on to a larger project that would take in many data points (e.g., volume, pitch, EQ, reverb) from a variety of songs by a chosen artist as a training data set, and then apply effects to the microphone input to make the user sound more like their favorite artist.
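The pitch-to-effect mapping described above can be sketched in Python under a few loud assumptions. This is not the project's actual patch. It assumes 44.1 kHz mono frames and uses a simplified YIN-style pitch detector. It also replaces the trained neural net with two fixed thresholds, `LOW_HZ` and `HIGH_HZ`, whose values are hypothetical, as are the effect labels. The point is only to show the input (a sung frequency) and the output (an effect mode) that the net is meant to learn.

```python
import math

SAMPLE_RATE = 44100  # assumed microphone sample rate in Hz

# Hypothetical register boundaries. In the actual project a neural net
# trained on the singer's own examples would learn this mapping instead.
LOW_HZ = 150.0
HIGH_HZ = 400.0


def estimate_pitch(frame, sample_rate=SAMPLE_RATE, fmin=80.0, fmax=1000.0, threshold=0.1):
    """Estimate the fundamental frequency (Hz) of a mono frame with a
    simplified YIN-style detector; returns None if no clear pitch is found."""
    min_tau = int(sample_rate / fmax)
    max_tau = int(sample_rate / fmin)
    window = len(frame) - max_tau
    if window <= 0:
        raise ValueError("frame is too short for the requested fmin")

    # Difference function: how much the frame differs from itself shifted by tau.
    diff = [0.0] * (max_tau + 1)
    for tau in range(1, max_tau + 1):
        diff[tau] = sum((frame[i] - frame[i + tau]) ** 2 for i in range(window))

    # Cumulative-mean normalization, so very short lags are not favored.
    cmnd = [1.0] * (max_tau + 1)
    running = 0.0
    for tau in range(1, max_tau + 1):
        running += diff[tau]
        cmnd[tau] = diff[tau] * tau / running if running > 0 else 1.0

    # Take the first lag whose normalized difference dips below the
    # threshold, then slide forward to the bottom of that dip.
    for tau in range(min_tau, max_tau + 1):
        if cmnd[tau] < threshold:
            while tau + 1 <= max_tau and cmnd[tau + 1] < cmnd[tau]:
                tau += 1
            return sample_rate / tau
    return None  # silence or unpitched noise


def choose_effect(freq_hz):
    """Map a sung pitch to one of the three effect modes described above."""
    if freq_hz is None:
        return "bypass"          # nothing pitched: leave the voice dry
    if freq_hz < LOW_HZ:
        return "harmony_above"   # low notes: harmonize above the singer
    if freq_hz > HIGH_HZ:
        return "harmony_below"   # high notes: harmonize below the singer
    return "doubling"            # mid-range: vocal doubling / widening


if __name__ == "__main__":
    # Synthetic 220 Hz tone standing in for a microphone frame.
    frame = [math.sin(2 * math.pi * 220 * n / SAMPLE_RATE) for n in range(2048)]
    f0 = estimate_pitch(frame)
    print(f"{f0:.1f} Hz -> {choose_effect(f0)}")
```

If the threshold rule were replaced with a trained model, the interface would stay the same: a frequency goes in and an effect label comes out. That input/output pair is roughly what a tool like Wekinator or an ML5 neural net would be trained on.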

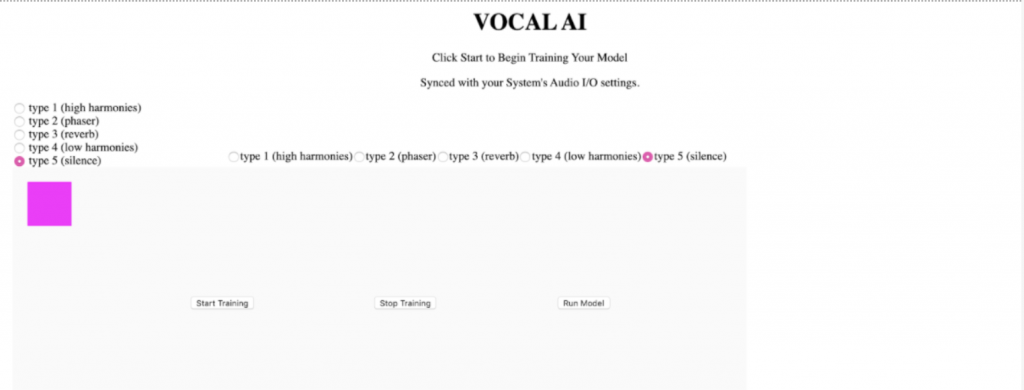

Unfortunately, the second half of the semester was disrupted by the coronavirus pandemic, and I was not able to finish my projects on campus. Nonetheless, during the second half of the semester I began actually implementing everything I had learned. Without the machine learning tools I had initially relied on (Wekinator, PD, etc.), I had to learn how to run web servers, use ML5/P5/CSS, and start putting together a real, usable interface. The figure above shows what the page currently looks like; it is hooked up to a neural net that I coded. I feel that I have grown tremendously and created some amazing projects over this semester, and I am very proud of the work I have done and happy to move forward with it. Specifically, I think I will be able to continue this work productively next semester as I learn more about computer music programming in JavaScript in my class with Professor Botelho, about HCI in my HCI class with Professor Peck, and about programming signals and systems in BMEG 350 with Professor Tranquillo.

Overall, I am content that I challenged myself and took on a project that I will carry with me into the future, whether I keep working on it myself or bring others on to help. I think this project is extremely worthwhile, and I have learned, and will continue to learn, a great deal from it. As I said before, I came into this semester knowing very little about machine learning or the development of electronic instruments, and now I can confidently say that I believe in my ability to implement and follow through with both. This skill will be huge for my career in the tech and music industries, and I am extremely grateful that this opportunity presented itself when it did.