- Much like the derivatives we learn about in math class, feature vectors can capture how a value changes over time, not just the value itself

- Think of a feature vector as a chunk with slots: one slot per feature value

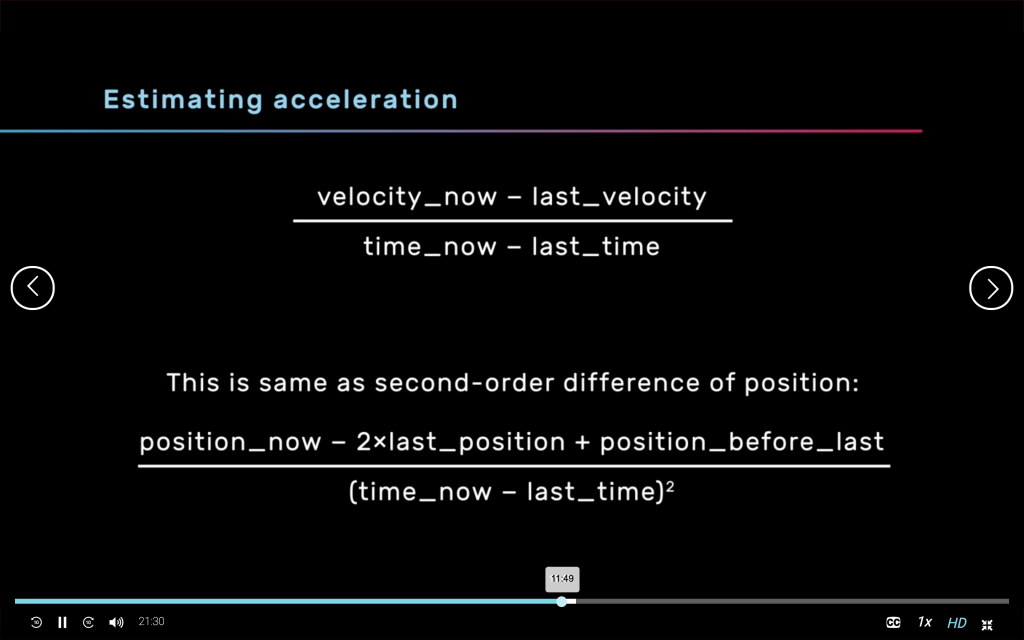

- 1st-order difference ≈ 1st derivative = "velocity" of the sound

- 2nd-order difference ≈ 2nd derivative = "acceleration" of the sound
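A minimal sketch in Python with NumPy, assuming the feature (here a made-up loudness value) is sampled once per frame at a fixed rate. A difference is the discrete stand-in for a derivative; divide by the frame period if you want units per second.

```python
import numpy as np

def differences(x):
    """Return the 1st- and 2nd-order differences of a 1-D feature sequence."""
    x = np.asarray(x, dtype=float)
    velocity = np.diff(x, n=1)      # x[n] - x[n-1]
    acceleration = np.diff(x, n=2)  # velocity[n] - velocity[n-1]
    return velocity, acceleration

# Example: a feature value (e.g. loudness) sampled once per frame
loudness = [0.0, 1.0, 4.0, 9.0, 16.0]
vel, acc = differences(loudness)
print(vel)  # [1. 3. 5. 7.]
print(acc)  # [2. 2. 2.]
```

Each difference is one element shorter than its input, so a feature vector that combines value, velocity, and acceleration has to start two frames in.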

- When training a model on a set of audio data, you can choose when input recording starts and stops

- Audio is only meaningful to us when it changes over time

- Audio features

- Choose a moment in time

- Look at the last few dozen/thousand moments (samples) leading up to this moment

- Pick a later moment and repeat
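The "choose a moment, look back, move later" loop is a sliding window. Below is a minimal sketch assuming a mono signal in a NumPy array; the window and hop sizes (1024 and 512 samples) are arbitrary choices, and RMS loudness is just one example feature picked for illustration.

```python
import numpy as np

def windowed_rms(signal, window_size=1024, hop_size=512):
    """Compute one RMS (loudness) value per window as the 'current moment' slides forward."""
    signal = np.asarray(signal, dtype=float)
    features = []
    # Each window ends at the current moment and looks back over window_size samples
    for end in range(window_size, len(signal) + 1, hop_size):
        frame = signal[end - window_size:end]
        features.append(np.sqrt(np.mean(frame ** 2)))
    return np.array(features)

sr = 44100
t = np.arange(sr) / sr                    # one second of audio
tone = 0.5 * np.sin(2 * np.pi * 440 * t)  # 440 Hz sine at amplitude 0.5
rms = windowed_rms(tone)
print(len(rms), rms[0])
```

A smaller hop gives a faster feature stream; a longer window gives a smoother but less responsive feature.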

- Spectral Centroid is a measure of timbre!!

- It is the magnitude-weighted average frequency of the spectrum (its "center of mass"), so it tells us where the sound's energy is balanced

- High frequency content = high spectral centroid = brighter sound

- Low frequency content = lower spectral centroid = warmer sound
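A minimal sketch of the centroid as the magnitude-weighted mean frequency of one frame, assuming NumPy's FFT and a Hann window (the window choice is an assumption). It compares a low ("warm") and a high ("bright") sine.

```python
import numpy as np

def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency (Hz) of one audio frame."""
    frame = np.asarray(frame, dtype=float)
    magnitudes = np.abs(np.fft.rfft(frame * np.hanning(len(frame))))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate)
    total = magnitudes.sum()
    if total == 0:  # silent frame: the centroid is undefined, report 0
        return 0.0
    return float((freqs * magnitudes).sum() / total)

sr = 44100
t = np.arange(2048) / sr
low = np.sin(2 * np.pi * 200 * t)    # "warm": energy near 200 Hz
high = np.sin(2 * np.pi * 5000 * t)  # "bright": energy near 5 kHz
print(spectral_centroid(low, sr) < spectral_centroid(high, sr))  # True
```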

- MFCCs (mel frequency cepstral coefficients)

- The mel frequency cepstral coefficients (MFCCs) of a signal are a small set of features (usually about 10-20) which concisely describe the overall shape of a spectral envelope.
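A from-scratch sketch of the usual MFCC pipeline for a single frame: power spectrum, triangular mel filterbank, log, then a DCT. The mel formula 2595·log10(1 + f/700), 26 filters, and 13 coefficients are common choices but assumptions here; library implementations differ in details such as filter normalization and DCT scaling.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters whose centers are evenly spaced on the mel scale."""
    mels = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mels) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):   # rising edge of the triangle
            fbank[i - 1, k] = (k - left) / (center - left)
        for k in range(center, right):  # falling edge of the triangle
            fbank[i - 1, k] = (right - k) / (right - center)
    return fbank

def dct_ii(x, n_coeffs):
    """Unnormalized DCT-II, keeping only the first n_coeffs coefficients."""
    n = len(x)
    k = np.arange(n_coeffs)[:, None]
    idx = np.arange(n)[None, :]
    return np.sum(x[None, :] * np.cos(np.pi * k * (2 * idx + 1) / (2 * n)), axis=1)

def mfcc(frame, sample_rate, n_filters=26, n_coeffs=13):
    frame = np.asarray(frame, dtype=float)
    n_fft = len(frame)
    # 1. Power spectrum of the windowed frame
    power = np.abs(np.fft.rfft(frame * np.hanning(n_fft))) ** 2 / n_fft
    # 2. Energy in each mel band
    energies = mel_filterbank(n_filters, n_fft, sample_rate) @ power
    # 3. Log compresses the dynamic range (small offset avoids log(0))
    log_energies = np.log(energies + 1e-10)
    # 4. DCT of the log band energies; the first few terms describe the envelope shape
    return dct_ii(log_energies, n_coeffs)

sr = 44100
t = np.arange(2048) / sr
coeffs = mfcc(np.sin(2 * np.pi * 440 * t), sr)
print(coeffs.shape)  # (13,)
```

Keeping only the first dozen or so DCT terms is what makes MFCCs a compact description of the spectral envelope rather than of every harmonic.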

- DSP thoughts:

- Use a real filter on your input voice, but if you can’t:

- A simple filter equation:

- The output is the average of the last two input samples: y[n] = (x[n] + x[n-1]) / 2

- The input helper acts as a filter

- You can CODE a FILTER!

- Look into better filter equations

- Different equations work well with different hardware
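The two-point average above as code: a minimal sketch in plain Python, assuming the sample before the first one is 0.

```python
def two_point_average(samples):
    """y[n] = (x[n] + x[n-1]) / 2, treating x[-1] as 0 (a simple low-pass FIR filter)."""
    output = []
    previous = 0.0
    for x in samples:
        output.append((x + previous) / 2.0)
        previous = x
    return output

print(two_point_average([0, 2, 0, 2, 0, 2]))  # [0.0, 1.0, 1.0, 1.0, 1.0, 1.0]
```

The fastest possible wiggle (alternating up/down every sample) is averaged away to a flat line, which is why this acts as a low-pass filter. Averaging over more past samples smooths more but responds more slowly.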

- OpenCV

- Video analysis

- Use fiducials

- A fiducial marker or fiducial is an object placed in the field of view of an imaging system which appears in the image produced, for use as a point of reference or a measure. It may be either something placed into or on the imaging subject, or a mark or set of marks in the reticle of an optical instrument.

- Stick fiducials on yourself in front of a camera?? Maybe make ‘em look cool to add to your aesthetic as an artist

- Haar cascades

- Cascading is a particular case of ensemble learning based on the concatenation of several classifiers, using all information collected from the output from a given classifier as additional information for the next classifier in the cascade. Unlike voting or stacking ensembles, which are multi-expert systems, cascading is a multistage one.

- Cascading classifiers are trained with several hundred "positive" sample views of a particular object and arbitrary "negative" images of the same size. After the classifier is trained, it can be applied to a region of an image to detect the object in question. To search for the object in the entire frame, the search window can be moved across the image and the classifier checked at every location. This process is most commonly used in image processing for object detection and tracking, primarily facial detection and recognition.
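A toy sketch of the two ideas above: a window must pass every stage in sequence (most windows are rejected early by cheap stages), and the window slides across the whole image. The three stage functions and their thresholds are hypothetical stand-ins, not trained Haar features; in practice OpenCV's `cv2.CascadeClassifier` loads a trained cascade.

```python
import numpy as np

# Hypothetical stages, cheapest first. Real Haar cascades use trained Haar-like features.
def stage_bright_enough(window):
    return window.mean() > 0.2

def stage_has_contrast(window):
    return window.std() > 0.1

def stage_center_brighter(window):
    h, w = window.shape
    center = window[h // 4: 3 * h // 4, w // 4: 3 * w // 4]
    return center.mean() > window.mean()

STAGES = [stage_bright_enough, stage_has_contrast, stage_center_brighter]

def cascade_accepts(window, stages=STAGES):
    # all() stops at the first failing stage, so rejected windows cost little
    return all(stage(window) for stage in stages)

def detect(image, window_size=8, step=4):
    """Slide a window across the image and return (row, col) of accepted windows."""
    hits = []
    rows, cols = image.shape
    for r in range(0, rows - window_size + 1, step):
        for c in range(0, cols - window_size + 1, step):
            if cascade_accepts(image[r:r + window_size, c:c + window_size]):
                hits.append((r, c))
    return hits

image = np.zeros((16, 16))
image[4:12, 4:12] = 0.5
image[6:10, 6:10] = 1.0  # a bright "object" centered at (8, 8)
print(detect(image))     # [(4, 4)]
```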

- Movement

- Compare pixels from one frame to the next

- Map the magnitude of the pixel changes to velocity/acceleration/drama/etc.

- Use openFrameworks and OpenCV
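A minimal frame-differencing sketch, assuming grayscale frames arrive as NumPy `uint8` arrays (in an openFrameworks/OpenCV setup they would come from the camera). Mean absolute pixel change is one possible measure of "how much moved"; its difference gives an acceleration-like feature.

```python
import numpy as np

def motion_amount(prev_frame, frame):
    """Mean absolute per-pixel change between two grayscale frames (0..255)."""
    # Convert to float first: subtracting uint8 arrays would wrap around
    diff = np.abs(frame.astype(float) - prev_frame.astype(float))
    return diff.mean()

def motion_features(frames):
    """Per-frame motion 'velocity' and its change ('acceleration')."""
    velocity = np.array([motion_amount(a, b) for a, b in zip(frames[:-1], frames[1:])])
    acceleration = np.diff(velocity)
    return velocity, acceleration

# Synthetic video: a 2x2 white square moves 1 px, then 3 px, then stops
frames = []
for x in [0, 1, 4, 4]:
    f = np.zeros((10, 10), dtype=np.uint8)
    f[0:2, x:x + 2] = 255
    frames.append(f)
vel, acc = motion_features(frames)
print(vel, acc)
```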

- How fast to send/process inputs?

- Cool to play with when you want choppy vs. smooth sounds

- Using too many new examples that are super similar or the same makes training take FOREVER and DOES NOT HELP

- When creating your training dataset, send features at a slow rate so that consecutive examples are fairly different
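One way to enforce this while recording, sketched in plain Python: keep an incoming feature vector only if enough time has passed since the last kept example and it is far enough (Euclidean distance) from it. The class name and both thresholds are hypothetical tuning knobs, not part of any particular tool.

```python
import math

class TrainingRecorder:
    """Hypothetical recorder that skips examples arriving too fast or too similar."""

    def __init__(self, min_interval_s=0.1, min_distance=0.05):
        self.min_interval_s = min_interval_s
        self.min_distance = min_distance
        self.examples = []
        self._last_time = None  # timestamp of the last *kept* example

    def offer(self, timestamp_s, features):
        if self._last_time is not None and timestamp_s - self._last_time < self.min_interval_s:
            return False  # too soon since the last kept example: throttle the rate
        if self.examples and math.dist(features, self.examples[-1]) < self.min_distance:
            return False  # nearly identical to the last kept example: adds little
        self.examples.append(list(features))
        self._last_time = timestamp_s
        return True

rec = TrainingRecorder()
stream = [(0.00, [0.0, 0.0]), (0.02, [0.5, 0.5]), (0.15, [0.01, 0.0]), (0.30, [0.5, 0.5])]
for t, f in stream:
    rec.offer(t, f)
print(rec.examples)  # [[0.0, 0.0], [0.5, 0.5]]
```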

- Tell your software specifically when your motion begins/ends

- Can do this with a simple trigger event, e.g. pressing a button on your Wiimote

- Downsample/upsample your buffers so that they are always the same length when looking at a given feature

- After a trigger or a set period of time, take data in a buffer and classify it
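A minimal sketch of "resample to a fixed length, then classify", assuming each buffer holds one feature value per sample captured between a start and stop trigger. Linear interpolation to 10 points and a 1-nearest-neighbour classifier are illustrative choices, not a prescribed method.

```python
import numpy as np

def resample(buffer, length=10):
    """Linearly interpolate a 1-D buffer of any length to exactly `length` points."""
    buffer = np.asarray(buffer, dtype=float)
    old_x = np.linspace(0.0, 1.0, num=len(buffer))
    new_x = np.linspace(0.0, 1.0, num=length)
    return np.interp(new_x, old_x, buffer)

def classify(buffer, labeled_examples, length=10):
    """1-nearest-neighbour on resampled buffers (the classifier choice is an assumption)."""
    query = resample(buffer, length)
    best_label, best_dist = None, float("inf")
    for label, example in labeled_examples:
        d = np.linalg.norm(query - resample(example, length))
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label

# Buffers captured between a "start" and a "stop" trigger, e.g. button presses
examples = [("up", [0, 1, 2, 3, 4]), ("down", [4, 3, 2, 1, 0])]
recorded = np.linspace(0, 4, 37)     # a slower "up" gesture: 37 samples instead of 5
print(classify(recorded, examples))  # up
```

Resampling makes a slow and a fast performance of the same gesture comparable point by point; it does not handle gestures whose speed varies within the performance, which is what dynamic time warping addresses.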

- Dynamic Time Warping

- Matching gestures over time

- How does one curve transform onto another?

- Match width (the warping window): limits how far apart in time two matched points can be

- Keeps features from being matched to similar points that occur at drastically different times (preserving the shape of the gesture)

- Training examples are downsampled to 10 time points, evenly spaced across the longest example
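A minimal dynamic time warping sketch for 1-D feature sequences, using absolute difference as the point cost. The optional `window` parameter is a Sakoe-Chiba band, one common way to implement the match-width limit described above; it is an assumption that this is how any particular tool does it.

```python
import numpy as np

def dtw_distance(a, b, window=None):
    """DTW distance between two 1-D sequences.

    `window` limits how far apart in time two matched points may be
    (None means unconstrained)."""
    n, m = len(a), len(b)
    if window is None:
        window = max(n, m)
    window = max(window, abs(n - m))  # band must be wide enough to reach the end
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - window), min(m, i + window) + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i, j] = d + min(cost[i - 1, j],      # a advances, b's point repeats
                                 cost[i, j - 1],      # b advances, a's point repeats
                                 cost[i - 1, j - 1])  # both advance
    return float(cost[n, m])

print(dtw_distance([0, 0, 1, 2, 3, 3], [0, 1, 2, 3]))  # 0.0
print(dtw_distance([0, 1, 2], [1, 2, 3]))              # 2.0
print(dtw_distance([0, 1, 2], [1, 2, 3], window=0))    # 3.0
```

The first call shows a slow and a fast version of the same curve matching perfectly. In the last two, a window of 0 forbids any warping, so the distance falls back to a plain point-by-point comparison.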

- Hidden Markov Models (HMMs):

- Use hidden states

- E.g., drawing a circle: bottom, left, top, right

- Probability distributions for features over each state

- Bottom → left → top → right → bottom

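A small sketch of the circle example as an HMM, scored with the forward algorithm (the probability of an observation sequence summed over all hidden-state paths). All probabilities are made-up numbers, and the observation is assumed to be a discretized screen region using the same four labels; gesture HMMs often use continuous (e.g. Gaussian) distributions over features instead.

```python
import numpy as np

STATES = ["bottom", "left", "top", "right"]

# Hypothetical parameters: each step the hidden state stays put or moves to the
# next quarter of the circle (bottom -> left -> top -> right -> bottom).
start = np.array([0.7, 0.1, 0.1, 0.1])
transition = np.zeros((4, 4))
for i in range(4):
    transition[i, i] = 0.6
    transition[i, (i + 1) % 4] = 0.4

# Noisy observations: 0.7 chance of seeing the true region, 0.1 for each other one
emission = np.full((4, 4), 0.1)
np.fill_diagonal(emission, 0.7)

def likelihood(observations):
    """Forward algorithm: P(observations | model), summed over all hidden paths."""
    alpha = start * emission[:, observations[0]]
    for obs in observations[1:]:
        # alpha @ transition sums over the previous state for each next state
        alpha = (alpha @ transition) * emission[:, obs]
    return float(alpha.sum())

def encode(labels):
    return [STATES.index(label) for label in labels]

circle = encode(["bottom", "bottom", "left", "left", "top", "top", "right", "right", "bottom"])
backwards = encode(["bottom", "bottom", "right", "right", "top", "top", "left", "left", "bottom"])
print(likelihood(circle) > likelihood(backwards))  # True
```

To classify, you would train one HMM per gesture and pick the model that gives the recorded sequence the highest likelihood.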